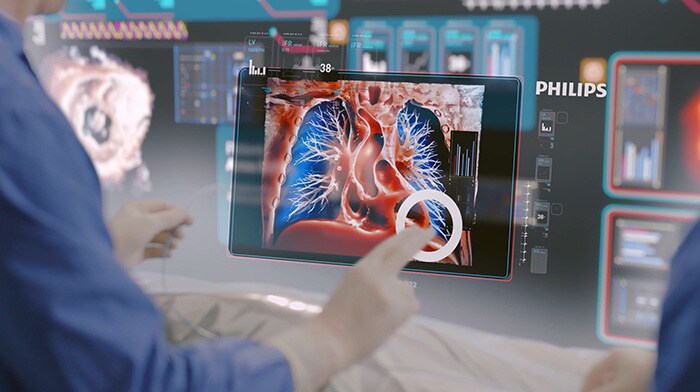

It’s 2033 and all my senses are heightened. I’m in the procedure room with my first patient of the day, John, a seemingly healthy 45-year-old who has been referred for a suspicious spot near the base of his left lung, picked up on a routine scan for kidney stones. It’s possibly lung cancer. Through my augmented reality (AR) glasses, I can review all of John’s previous diagnostic CT scans and overlay them onto his actual body. I can see that John has a tiny 4 mm lesion. My interventional suite, powered by artificial intelligence (AI), has already mapped the shortest and safest path for me to reach the spot, one that minimizes the risk of a collapsed lung, or pneumothorax, and avoids crossing critical blood vessels.

Millimeters count in this type of procedure. Because both my hands are busy holding the catheter and biopsy needle in place, I confirm the path by pressing a virtual button with my eyes: I look at it, which turns it red, and say “activate.” Then I follow the virtual AR path – advancing my miniature catheters, needles and instruments along the safest route – to the lesion and take a biopsy. Within seconds, a remote pathologist reviews the specimen using tele-pathology and confirms that it is an early-stage lung cancer.

Time to treat. I reach out to a live hologram of John’s lung and start to virtually sculpt a 3D model of the kill zone around the tumor. This is where I plan to destroy the lung cancer cells using microwave energy.

The whole procedure is carried out through a pencil-point incision that leaves behind nothing more than a band-aid. It means that within just one hour, I can tell John that we have diagnosed – and destroyed – all of his tiny tumor in one go.

My surgical partner is this highly intelligent room

This is the future of surgery: one single room that acts as my surgical partner. And if you’re a physician – whether you’re a cardiologist, a vascular surgeon, a neuroradiologist, or an interventional radiologist like me – I’m convinced this is where many of us will be working in 10 years’ time. I believe it’s the logical next step for image-guided therapy (IGT), which I consider modern-day surgery: minimally invasive techniques that allow physicians to do what traditional surgery used to do, and that even make possible new procedures that were never feasible before.

Everything I need is in this highly intelligent suite. It’s like having a second set of eyes (after all, the “image” in “image-guided therapy” refers to the X-ray and ultrasound imaging that can see inside the body), an extra brain (AI for the “guided”) and an extra pair of hands (robotics to assist with the “therapy”). Together, it’s a seamless mix of photo-realistic AR, AI, robotic-assisted procedural automation, ultra-high-resolution 3D medical imaging and smart devices that all talk to one another to give me truly superhuman abilities.

This may sound far-fetched, but keep reading: many of the things I’m going to describe are being worked on today somewhere within Philips.

And by 2033, we will need this kind of suite more than ever. With patients getting older and sicker and healthcare funds shrinking globally, workloads are intensifying for physicians and staff. Shockingly, almost half of US doctors and over 60% of nurses reported suffering from burnout in 2022 [1]. We can and must do better. A suite like this could help seasoned interventional physicians do more, more easily. It could also help younger colleagues get up to speed quickly and confidently, and even support physician assistants in taking on more advanced aspects of patient care. Above all, it could help more patients regain their quality of life.

Let’s take a closer look at how.

2033: A day in the life

In interventional radiology, no two days are the same. In 2033 I can carry out 10 or more procedures a day, compared with around seven today.

Today, my first patient is John…

09:00AM – John, 45, lung cancer

I’m already used to using voice controls to tell my car and phone what to do. Now I have the same capabilities at work too. The room recognizes my voice and hand gestures, and it adapts to me. It pulls up the data I need for John’s procedure. A personalized virtual screen (instead of a bank of a dozen small ones crammed with data) shows me what I need to know. Beside me, the nurse has his own screen with insights about John’s vitals, while the technician nearby has her own data too.

In 2033, missed or delayed diagnoses, which used to be a leading cause of patient death [2], are finally becoming less common. When I first started out in my career, I worked with CT images that looked like black-and-white photographs with only one object in focus. They could only tell me about the size and shape of a piece of anatomy. If I’d used that type of imaging with John, it would have been very difficult to see his tumor, let alone biopsy it.

Instead, he would have been sent home and told to wait six months for a follow-up scan. We used to call this “watchful waiting”, but it often turned into a long period of watchful worrying. For John and his loved ones, it would most likely have caused a great deal of anxiety.

But in 2033, I can see every detail within and around John’s lungs in sharp focus, and also know what the tissue is made of (we call this material decomposition), which means I can see precisely where the cancerous cells that need destroying are.

The procedure is also much safer for both of us. Instead of using X-ray fluoroscopy to see the tiny devices I use to treat John, I rely on a groundbreaking technology called Fiber Optic RealShape (FORS), which uses light to let me navigate through the body in real time and in 3D, from any angle. That means no radiation for John, and no need for me and the staff to wear heavy protective lead aprons.

10:00AM – Carlo, 53, blocked artery

After John, I treat Carlo, 53, who has a blocked artery in his leg caused by peripheral vascular disease. He is followed by Sue, who was in a car crash earlier today. We successfully embolize, or block, the bleeding vessel in her spleen to stop her internal bleeding, and send her to recovery within 45 minutes. After Sue, I see Sara, who has non-cancerous fibroids in her womb. Instead of removing her womb entirely – a radical and often traumatic operation for the patient – we can embolize the fibroids in a minimally invasive procedure that enables Sara to recover much faster, both mentally and physically.

12:00PM – Maria, 27, stroke

With John, our first patient of the day, my team knew we could take our time if we needed it. But Maria, my last patient of the morning, is a medical emergency, like Sue. Although she’s only 27, Maria has been diagnosed with an ischemic stroke (in the US, one-third of stroke victims are younger than 65 [5]). Hers is caused by an irregular heartbeat, or atrial fibrillation (Afib), a condition widely regarded as a global public health problem: an estimated 33.5 million people around the world live with Afib, which also increases the risk of stroke five-fold. Unfortunately, this is exactly what happened to Maria. Yet she is still within the golden window – the first hours following a stroke, during which patients can have a thrombectomy. This is a minimally invasive IGT procedure in which I remove a minute clot, no larger than a grain of rice, to restore blood flow to the brain and reverse the long-term effects of stroke.

Again, the room adapts to the complexity of the procedure and to my needs. I have a device that can navigate itself through Maria’s arteries using robotic-assisted procedural automation. I can also place more tools at the tip of that device: a laser, sensors, ultrasound equipment, a balloon, or a small gripping arm. When I touch the hologram of an artery in Maria’s neck, the device threads itself along the arteries to the clot in seconds. Through my AR glasses I can follow the procedure much more accurately than with the naked eye – as can a group of junior physicians from affiliated hospitals around the world, who are all wearing AR glasses that show them exactly what I’m looking at and what I’m doing. It means that they can learn about the procedure first-hand. And after it’s over, a group of doctors in Australia replicates exactly the same procedure on their own using 3D-printed models, taking the old motto “see one, do one, teach one” to the next level.

Maria recovers well. The room’s AI has automatically captured a step-by-step report of my work along the way, which saves me from spending valuable time dictating the procedure and my findings into Maria’s health record. She’s now being cared for in the neuro ICU by an amazing nursing team – one that’s supported by monitoring solutions that can predict potential medical emergencies before they happen.

I feel reassured, because Maria will also be wearing an unobtrusive body sensor that will monitor her heartbeat for the next 14 days to detect any abnormal heart rhythm, both in the hospital and once she is ready to go home and be with her family again.

02:00PM – Taylor, 55, angina

At our medical facility, we have multiple suites next to one another so that we can treat as many patients and conditions as possible. In one of the neighboring suites, my interventional cardiologist colleague Joanna is caring for Taylor. Taylor is suffering from chest pain, which turns out to be acute angina caused by a blockage in a coronary artery that limits blood flow to the heart. They need what’s known as a percutaneous coronary intervention (PCI), in which a catheter is passed through a tiny incision in the wrist to carry a stent that opens up the blocked artery.

PCI used to be a highly complex procedure because it was very hard to determine, first, whether to treat someone at all and, second, which coronary vessels needed treatment. What’s exciting in 2033 is that before Joanna even touches Taylor, she can practice with a virtual stent, adjusting its length and placement until she can predict the optimal configuration for Taylor’s condition. Only once Joanna is confident that she has found it does she begin the procedure.

03:00PM – 05:00PM

Over the next two hours, I treat a liver tumor in Danny, drain a blocked kidney in Mohammed, and help an elderly woman called Martha move again for the first time in months by performing a procedure called vertebroplasty in her lower spine. She walks out of the hospital pain-free.

05:00PM – Xiu, 67, atrial fibrillation

Our next patient, Xiu, has atrial fibrillation, just like Maria. In Xiu’s case it has not (yet) caused a stroke, but it has a great impact on her quality of life. Her condition has become very poor and she has had to miss out on many of the social activities that gave so much color to her life. In 2033, Xiu is being treated by my electrophysiologist colleague Jennifer in the suite next door.

Jennifer uses the latest 3D imaging, artificial intelligence and robotic catheter navigation technologies to maneuver catheters through the beating heart with utmost precision. She reaches the target location easily and efficiently, then destroys the tiny collection of cells wreaking havoc on Xiu’s heart. In doing so, she stops the electrical misfires and returns the tick-tock of Xiu’s heartbeat to normal. In 2023 this intervention could take anywhere from 1.5 to 4 hours, but in 2033 Jennifer is able to help Xiu in no more than 30 minutes. She can also assess in real time whether her treatment has been successful.

The best part of my day

Perhaps the most exciting thing about this story is that we’re well on our way to enabling Maria’s procedure, as well as all the others I’ve just described. As a practicing physician, I find this prospect totally thrilling. Not only because it will make my life, and the lives of my colleagues, much easier, but more importantly because of the impact it will have on people like Maria, Mohammed and John, and on all the friends and family who care about them. Being able to tell a patient that their procedure has been a resounding success is always the best part of my day – every day.

How do you see the future of healthcare? And how can we bring it to life together? Let me know by reaching out to me on LinkedIn.

Sources:

[1] Medscape. (2022). Available at https://www.medscape.com/slideshow/2022-lifestyle-burnout-6014664

[2] Graber ML. The incidence of diagnostic error in medicine. BMJ Quality & Safety. (2013);22:ii21–ii27.

[3] Atrial fibrillation and stroke: unrecognised and undertreated. The Lancet. (2016). Available at https://bit.ly/2X2dKgK

[4] The Stroke Association. (2018). State of the Nation report. Available at https://bit.ly/2Q9armK.

[5] Emory University

Author

Atul Gupta

Chief Medical Officer, Image Guided Therapy

Atul Gupta, MD, is Chief Medical Officer at Philips’ Image Guided Therapy and a practicing interventional radiologist. Prior to joining Philips in 2016, Atul served on Philips’ International Medical Advisory Board for more than 10 years. Atul continues to perform both interventional and diagnostic radiology in suburban Philadelphia, in both hospital and office-based lab settings. He has been repeatedly recognized in the media as a top physician in his specialty and serves on several advisory boards. He has also published and lectured internationally on a range of interventional procedures.
